Improve Integration Tests With GitHub Action Service Containers

We explore how GitHub Actions Service Containers can improve integration tests: in my experience they perform better than Docker layer caching, cut build times, and suit parallel tests that run on separate runners.

Setting The Scene

Typically, integration test suites require a set of temporary Docker services to connect to, such as a relational database or a caching service.

We tend to set up these services via a docker-compose.yml file, so that integration tests can have services exposed to them and the services can be torn down easily.
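For illustration, a docker-compose.yml of this kind might look like the minimal sketch below. The images, tags, and password are assumptions chosen for the example and are not taken from a real project.

```yaml
# docker-compose.yml (illustrative sketch; images, tags and credentials are assumptions)
services:
  postgres:
    image: postgres:15
    environment:
      POSTGRES_PASSWORD: postgres   # throwaway credential for local/CI tests only
    ports:
      - "5432:5432"                 # host:container
  redis:
    image: redis:7
    ports:
      - "6379:6379"
```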

How Can We Improve On This?

We can explore GitHub service containers!

They allow us to specify Docker images and which ports to expose to the GitHub runner that is running your test suite. The syntax is almost identical to a Docker Compose YAML file. The only differences I found were in how env variables are declared and in other nuances such as specifying bespoke health check parameters.
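As a rough sketch of what that looks like, here is a workflow with two service containers. The file name, job name, image tags, credentials, and the `make integration-test` command are all assumptions made for illustration; only the `services`, `image`, `env`, `ports`, and `options` keys are part of the GitHub Actions workflow syntax.

```yaml
# .github/workflows/integration.yml (hypothetical file name)
name: integration-tests
on: [push]

jobs:
  test:
    runs-on: ubuntu-latest
    services:
      postgres:
        image: postgres:15
        # Compose calls this key `environment`; here it is `env`.
        env:
          POSTGRES_PASSWORD: postgres
        ports:
          - 5432:5432
        # Health check parameters are passed as container creation flags.
        options: >-
          --health-cmd pg_isready
          --health-interval 10s
          --health-timeout 5s
          --health-retries 5
      redis:
        image: redis:7
        ports:
          - 6379:6379
    steps:
      - uses: actions/checkout@v4
      - name: Run integration tests
        run: make integration-test   # hypothetical test command
        env:
          # The job runs directly on the runner, so services are reached via localhost.
          DATABASE_URL: postgres://postgres:postgres@localhost:5432/postgres
          REDIS_URL: redis://localhost:6379
```

Because the job runs on the runner itself rather than inside a container, the mapped ports are reachable on localhost, much as they would be with a local Compose setup.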

Why Use GitHub Action Service Containers?

- No need to write your own health check scripts

GitHub Actions does this for you.

Some services, such as Elasticsearch, need extra health check parameters, which you specify under options:

elasticsearch:

image: docker.elastic.co/elasticsearch/elasticsearch:7.1.1

ports:

- 9200:9200

- 9300:9300

env:

discovery.type: single-node

options: >-

--health-cmd "curl http://localhost:9200/_cluster/health"

--health-interval 10s

--health-timeout 5s

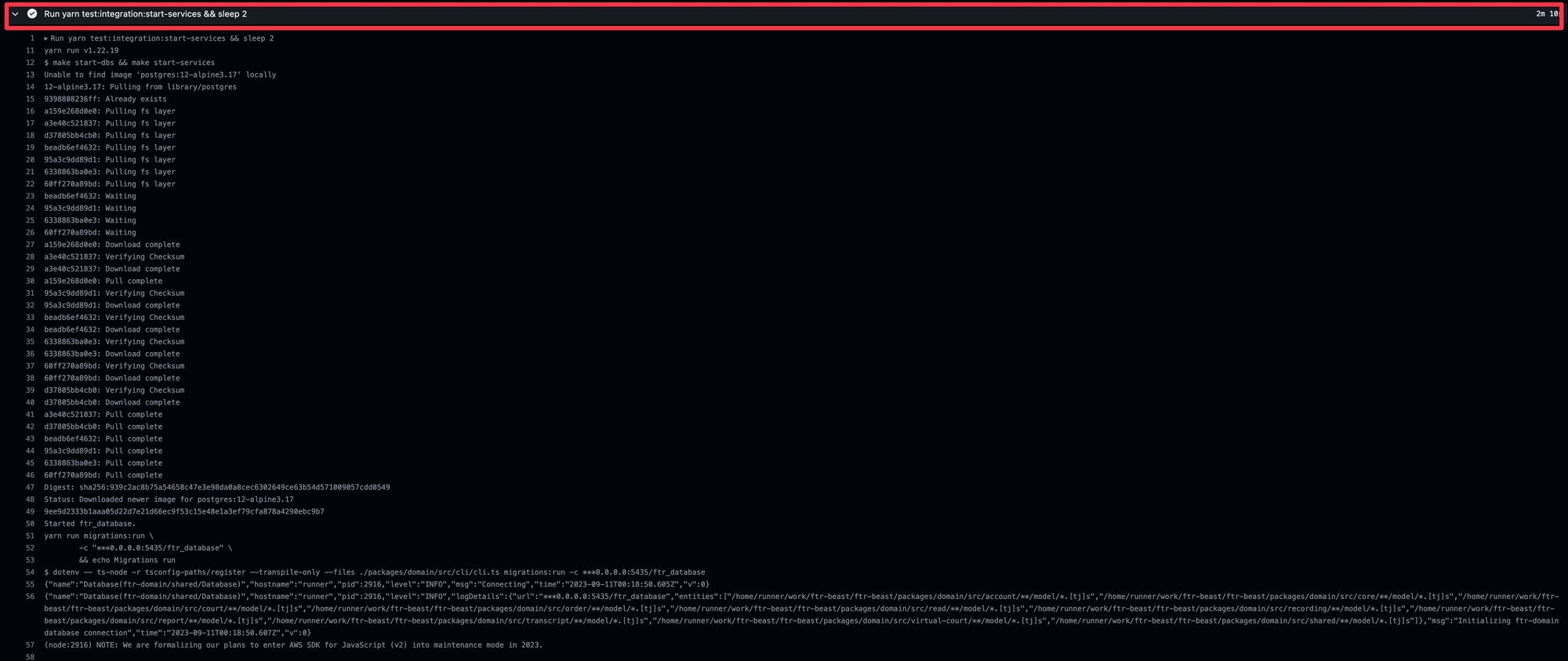

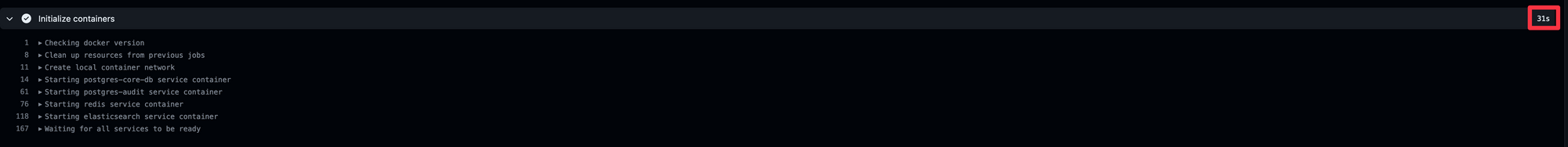

--health-retries 10- Cost/Time savings - I found they cut build times by around a minute

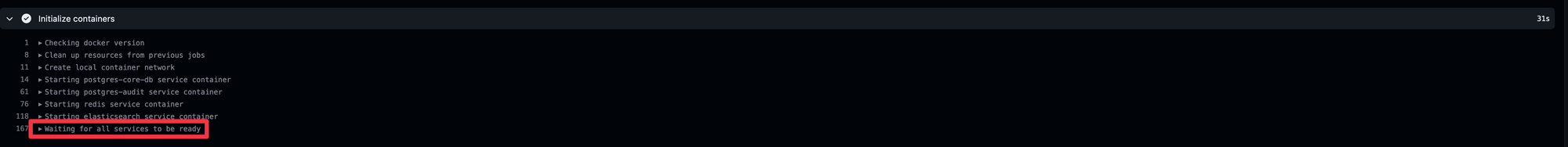

Before (running docker build):

After:

- Faster than utilizing Docker layer caching.

I found using GitHub Actions Service Containers faster and more efficient than running a docker build in a dedicated job and then relying on Docker layer caching so that subsequent jobs only need to fetch from the cache.

The reason is that reading Docker layers from the cache was far slower than the process GitHub Actions Service Containers go through (side note: GitHub doesn't charge for ingress).

- Less set up for individual tests.

If you're running your integration tests in parallel via a matrix, each individual test needs to set up its services individually. (Added bonus: each test runs on its own runner, so we don't have to worry about memory, and if we have flaky tests we can retry just the test that failed.)

If this takes only one minute per runner instead of two, the time saved compounds. And because a parallel run is only as fast as its slowest test, we also save on total wait time.
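The parallel setup described above could be sketched as follows. The suite names, the `SUITE` variable, and the `make integration-test` command are hypothetical; the point is only that every matrix job gets its own runner and its own copy of the service containers.

```yaml
# Sketch of a matrix job; suite names and the make target are assumptions.
jobs:
  integration:
    runs-on: ubuntu-latest
    strategy:
      fail-fast: false               # let the other suites finish if one fails
      matrix:
        suite: [users, orders, search]
    services:
      postgres:
        image: postgres:15
        env:
          POSTGRES_PASSWORD: postgres
        ports:
          - 5432:5432
        options: >-
          --health-cmd pg_isready
          --health-interval 10s
          --health-timeout 5s
          --health-retries 5
    steps:
      - uses: actions/checkout@v4
      - name: Run one suite per runner
        run: make integration-test SUITE=${{ matrix.suite }}
```

With this layout, each suite shows up as a separate job in the GitHub UI, so a single failed suite can be re-run on its own.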

When You Might Not Want To Use Them:

- Disparate testing processes between your build environment and local development environment

Using a docker-compose.yml gives the same experience both locally and in CI. With service containers we hard-code versions and tags in our GitHub Actions workflow files, so they can become out of date, which might be a gotcha.

This could be mitigated by running your GitHub Actions locally using nektos/act if you want local verification, but that is not always desirable.

- Two sets of Docker image versions to keep track of: local and the GitHub Actions workflow

Again, this could be mitigated by running your GitHub Actions locally using nektos/act if you want local verification, but that is not always desirable.

- Larger/messier GitHub Actions workflow files.

We can go from one single neat command that sets everything up (i.e. one command that invokes all the necessary start-up scripts and post-start health checks) to an inflated number of lines specifying the service definitions.
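For contrast, the "single command" style might look like the sketch below, which assumes a docker-compose.yml at the repository root, a runner with Docker Compose v2 available, and a hypothetical `make integration-test` target. The `--wait` flag asks Compose to block until the services are running or healthy, which stands in for a hand-written health check script.

```yaml
# Sketch of the Compose-based alternative; the make target is an assumption.
jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Start services
        run: docker compose up -d --wait   # service definitions live in docker-compose.yml
      - name: Run integration tests
        run: make integration-test
```

The workflow stays short because the service definitions live in the Compose file, which is exactly the trade-off against the longer `services` block that service containers require.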

Summary

I've found GitHub Actions Service Containers to cut service setup time by around 50% in all of my cases. For situations where you need to run your tests in parallel and each runner has to set up its own services, the pros of using service containers outweigh the cons.

The major cost, in my opinion, is having duplicate Docker image definitions, one local and one in CI, that must be manually synced.

We should be striving for faster build times and a better developer experience. Slow integration tests in CI are more common than you think. Running them in parallel means each test runs in its own environment and is easy to see in GitHub, as opposed to all tests running on one runner and producing a sprawl of console logs. It also lets developers retry just the test that failed rather than the entire suite.